Computer vision in 2020

Computer vision has been around since the 1950s, when primitive neural networks first managed to identify and sort simple shapes. Recent advances have been astounding, but the new need for contactless experiences is about to send awareness of, understanding of and demand for the technology into the stratosphere.

This interdisciplinary scientific field deals with how computers can gain a high-level understanding of digital images or videos. Computer vision typically works by training a deep learning model on large sets of example images so that it learns to recognise certain objects. It can then suggest what an item is when it is confident it has found a match. The more examples the system is given, the more it can recognise: think of how your iPhone learns to recognise members of your family in your photos.

In a world of social distancing, computer vision’s full potential is about to be realised.

It will be vital in mitigating risk and easing the fear of infection by enabling all manner of autonomous and contactless experiences.

2LK have always had a keen interest in emerging technology, particularly in the field of optics and next-generation sensors. We’ve delivered numerous interactive experiences using Lidar cameras and 3D depth-tracking technology, such as our award-winning work for Intel on ‘The Wonderwall’. Add a layer of AI to these types of interactive experiences and there’s the capacity to develop very sophisticated systems that learn and evolve over time.

If we rewind to January 2020, before lockdowns and the global pandemic, the studio was already working on object and image recognition tools that could offer longevity in the ever-changing, fast-paced world of brand experiences.

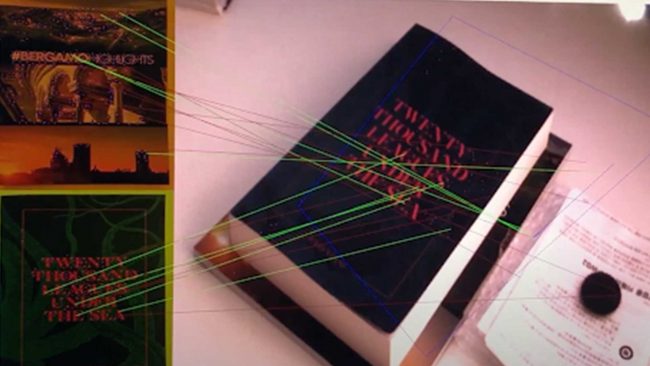

A world-leading imaging brand asked us to develop a way for people to interact with its technology autonomously, without needing to engage with staff. We devised a seemingly lo-fi experience in which guests’ only tactile exchange would be with physical print samples, each one (there could be hundreds) serving as a trigger to launch digital content streams. The bespoke, intelligent software tool works by detecting and analysing what the user is holding. The whole process takes a split second and is highly accurate. Because it is delivered via self-service platforms, exploring the content is simple and intuitive. The experience feels very analogue, even though it’s driven by a highly sophisticated computer system.

(Image: Environment design, object recognition).

The result? A huge reduction in demo staff and a much more streamlined consumer experience. Users can find the information they want quickly and satisfyingly, without any physical interaction with other people. Think of it as augmented reality in reverse: you hold the triggering object rather than the device.

Little did we know back then how well this type of solution could work post-pandemic. As we emerge from lockdown and live activations begin once more, contactless, staff-free demos that still deliver layers of detail and gratification will become a major consideration.

(Image: Developer view of object recognition).

Replacing humans with AI is a topic of much contention, but it’s hard to argue with the benefits. Retail is leading the way: the Bentley Inspirator interprets your emotional reactions to different sensory stimuli and then recommends a car configuration, while Amazon Go automatically tracks your shopping. Computer vision will almost certainly become commonplace at trade shows and in offices in the not-too-distant future too.